Congratulations to Yunqian, who received one of the Best Presentation Awards at IPIN 2024 for his paper.

Projects

|

Text spotting with smartphone cameras

We are developing fast text spotting algorithms that can reliably detect and localize any text visible in images acquired by a smartphone camera. Qin, Manduchi. Research funded by CITRIS and NIH NEI. |

|

Geometric reconstruction of indoor environments

Indoor environments are often characterized by simple geometric features such as lines and planes that are either parallel or orthogonal (Manhattan geometry). Our algorithms exploit the Manhattan geometry for robust structure and motion reconstruction. |

|

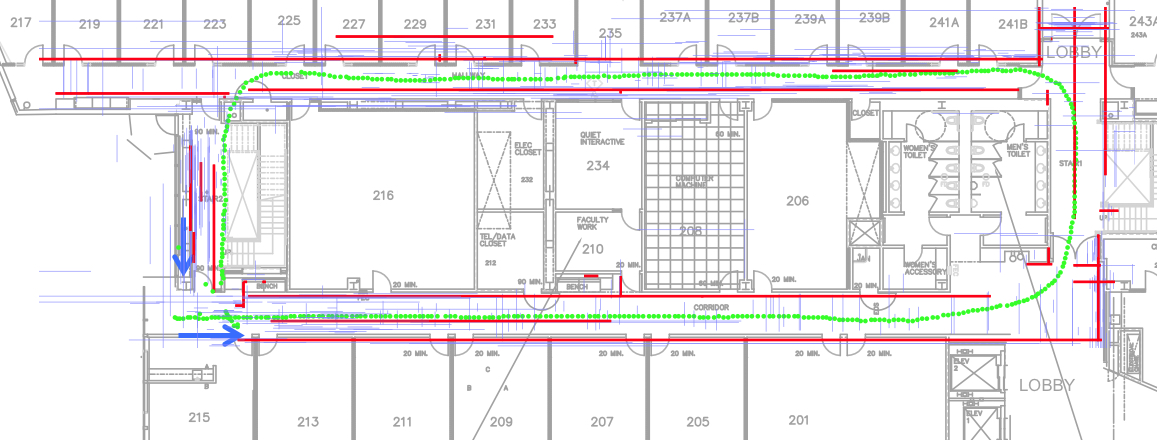

Blind indoor localization using inertial sensors

Personal indoor localization has received a lot of attention in recent years. We are interested in simple techniques that use the inertial sensors embedded in a smartphone to help blind travelers localize themselves. However, the gait of blind walkers using a long cane or a dog is different from that of sighted walkers. We have collected an extensive inertial data set (WeAllWalk) from blind walkers, and are developing robust algorithms for self-localization and safe return based on step counting and turn detection. Flores, Manduchi. Research funded by CITRIS. The WeAllWalk data set can be accessed here. |
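As a rough illustration of what step counting and turn detection from inertial data can look like, here is a minimal sketch. It is not the project's algorithm: the function names, the threshold-crossing step detector, the yaw-rate integration, and all default parameter values are assumptions chosen for illustration, and they are not tuned for cane or guide-dog users.

```python
import math

def count_steps(accel_mag, threshold=11.0, min_gap=3):
    """Count upward crossings of `threshold` in accelerometer magnitude (m/s^2).

    `min_gap` is the minimum number of samples between two counted steps.
    Both values are illustrative assumptions, not calibrated settings.
    """
    steps = 0
    last = -min_gap
    for i in range(1, len(accel_mag)):
        crossed = accel_mag[i - 1] < threshold <= accel_mag[i]
        if crossed and i - last >= min_gap:
            steps += 1
            last = i
    return steps

def detect_turns(yaw_rate, dt, min_angle_deg=60.0, rate_floor=0.2):
    """Integrate gyroscope yaw rate (rad/s) over runs where |rate| > rate_floor.

    Returns the signed angle in degrees of every run whose accumulated
    rotation reaches `min_angle_deg`.
    """
    turns = []
    acc = 0.0
    for r in list(yaw_rate) + [0.0]:  # trailing zero closes a final run
        if abs(r) > rate_floor:
            acc += r * dt
        else:
            if abs(math.degrees(acc)) >= min_angle_deg:
                turns.append(math.degrees(acc))
            acc = 0.0
    return turns

# Synthetic example: two acceleration peaks and one roughly 90-degree turn.
accel = [9.8, 12.0, 9.0, 9.8, 12.5, 9.0, 9.8, 9.8]
yaw = [0.0] * 5 + [1.0] * 16 + [0.0] * 5
print(count_steps(accel))
print(detect_turns(yaw, dt=0.1))
```

A practical system would also need to handle sensor drift, varying phone placement, and the gait differences noted above.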

|

Assisted mobile OCR

Mobile OCR apps allow blind people to access printed text. However, for OCR to work, it is necessary that a well-framed, well-resolved picture of the document is taken - something that is difficult to do without sight. We are developing computer vision-based mechanisms that can provide directions to a blind user in real time about where to move the phone to increase the chance of capturing an OCR-readable snapshot of a document. Cutter, Manduchi. Research funded by NIH NEI. |

|

Accessible public transportation

Using public transportation may be challenging for those who cannot see or have cognitive impairments. We are developing a cloud-supported sensory infrastructure that will facilitate access to real-time information for travelers using public transit. Flores, Manduchi. Research funded by TRB and NSF. Press release here. ABC 7 news video here. |

|

Gaze-contingent screen magnification

People with low vision may need screen magnification to access a computer. We are developing algorithms for eye gaze-based control of screen magnification. Our system uses images from the camera embedded in the computer screen to detect and track the user's eye gaze. Kaur, Cazzato, Dominio, Manduchi. Research funded by Research to Prevent Blindness and Reader’s Digest Partners for Sight Foundation. Press release here. |

Completed Projects

|

(Computer) vision without sight

Mobile computer vision applications normally assume that the user can look through the viewfinder. But what if the user has no sight? This project aims to understand how a blind person can access information about the environment using mobile vision, and to identify the requirements of a mobile computer vision system to support information access without sight. Manduchi. Work in collaboration with J. Coughlan of SKERI. Research funded by NIH NEI. |

|

High-efficiency color barcodes

Color barcodes (e.g. Microsoft Tag) enable higher information density than regular 2-D barcodes. We are developing a new approach to increase the number of colors that can be used in a barcode (and thus the achievable information density) while ensuring robust information recovery via mobile vision. Bagherinia, Wang, Manduchi. Research funded by NSF. |

|

Wayfinding for blind persons using camera cell phones

We have developed a system that allows blind persons to find their way in an unfamiliar environment using a regular camera cell phone. Locations of interest are labeled with specialized color markers that are detected quickly and robustly by the cell phone. A blind user can thus be guided through these landmarks to their destination. Here you can download the software for color marker detection and design. Bagherinia, Gray, Manduchi. Work in collaboration with J. Coughlan of SKERI. Research funded by NIH and NSF. |

|

Reading difficult bar codes with cell phones

There is a growing interest in cell phone apps that can read bar codes printed on products. Unfortunately, a number of factors (low resolution, motion blur, poor lighting) make bar code reading by cell phones a challenging problem. We have developed an algorithm for maximum likelihood bar code reading that outperforms all other published state-of-the-art techniques. Gallo, Manduchi. Research funded by NIH and NSF. |

|

Environment exploration using a virtual white cane

The long cane is the most widely used mobility tool for blind people. It allows one to extend touch and to "preview" the lower portion of the space in front of oneself. We are designing laser-based hand-held devices that enable environment exploration without the need for physical contact. Using active triangulation, our devices can identify obstacles and other features that are important for safe ambulation (such as steps and drop-offs). Ilstrup, Yuan, Manduchi. Research funded by NSF. |
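The principle behind active triangulation can be sketched in a few lines. The geometry assumed here (a laser beam parallel to the camera's optical axis at a known baseline, with a pinhole camera) and the names `triangulate_range` and `find_range_jumps` are illustrative assumptions, not a description of the actual device. Under that geometry, the range is z = f · b / d, where f is the focal length in pixels, b the baseline in meters, and d the offset in pixels of the imaged laser spot from the principal point.

```python
def triangulate_range(disparity_px, focal_px, baseline_m):
    """Range to the laser spot (meters) under the assumed parallel-beam geometry."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px

def find_range_jumps(ranges, threshold_m):
    """Indices where consecutive range readings differ by more than threshold_m.

    A sudden jump in range while sweeping the device is a simple cue for
    a step or drop-off; the threshold is an illustrative assumption.
    """
    return [
        i for i in range(1, len(ranges))
        if abs(ranges[i] - ranges[i - 1]) > threshold_m
    ]

# Hypothetical numbers: 500 px focal length, 10 cm baseline.
print(triangulate_range(50, focal_px=500, baseline_m=0.1))
sweep = [1.0, 1.02, 1.05, 1.6, 1.62]
print(find_range_jumps(sweep, threshold_m=0.3))
```

Because range is inversely proportional to the measured offset, range resolution degrades quickly with distance, which is one reason such devices are typically aimed at the nearby space in front of the user.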

| |

High-dynamic-range Imaging

For scenes characterized by a range of irradiance values that is too large to be captured with a single shot, one can resort to High-dynamic-range Imaging (HDR). A common strategy is to capture a stack of differently exposed pictures and combine them into an HDR image. We investigate different stages of this pipeline, from radiometric calibration, to the weighting schemes used in the combination, to the correction of artifacts such as ghosting in the final image. Gallo, Manduchi. Work in collaboration with M. Tico, N. Gelfand, and K. Pulli of the Nokia Research Lab, Palo Alto. Research funded in part by Nokia. |
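To make the "combine a stack of differently exposed pictures" step concrete, here is a minimal sketch of a weighted merge. It assumes the pixel values are already linear in irradiance (that is, radiometric calibration has been applied) and uses a simple triangular "hat" weighting; both are illustrative assumptions, and the project studies other weighting schemes. The names `hat_weight` and `merge_hdr` are hypothetical.

```python
def hat_weight(z, z_min=0, z_max=255):
    """Triangular weight: low near under- and over-exposed values, high mid-range."""
    mid = 0.5 * (z_min + z_max)
    return (z - z_min) if z <= mid else (z_max - z)

def merge_hdr(stack, exposure_times):
    """Merge a stack of exposures into per-pixel irradiance estimates.

    stack: list of images, each a flat list of linear pixel values.
    exposure_times: one exposure time (seconds) per image.
    Each exposure estimates irradiance as z / t; estimates are averaged
    with hat weights so that clipped pixels contribute little or nothing.
    """
    n = len(stack[0])
    out = []
    for i in range(n):
        num = den = 0.0
        for pixels, t in zip(stack, exposure_times):
            w = hat_weight(pixels[i])
            num += w * pixels[i] / t
            den += w
        if den > 0:
            out.append(num / den)
        else:
            # Every exposure is clipped at this pixel: fall back to a plain mean.
            est = [pixels[i] / t for pixels, t in zip(stack, exposure_times)]
            out.append(sum(est) / len(est))
    return out

# Two exposures (1 s and 2 s) of a two-pixel image; the second pixel
# saturates in the longer exposure and is therefore ignored there.
stack = [[50, 128], [100, 255]]
print(merge_hdr(stack, [1.0, 2.0]))
```

This sketch does not address ghosting: if the scene moves between exposures, the per-pixel estimates disagree and a merge like this one blends them into artifacts.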

|

Video surveillance using an ultra-low-power contrast-based camera node

Energy consumption is a critical constraint for wireless camera networks. We have designed and implemented a fast recognition algorithm on a self-standing node based on an ultra-low-power contrast-based camera and a Flash FPGA processor. The whole node consumes less than 10 mW for image acquisition and processing. Gasparini, Manduchi, Gottardi. Research in collaboration with the Fondazione Bruno Kessler, Trento, Italy. |

|

Viewpoint Invariant Pedestrian Recognition

Recognizing people in images and video is one of the most fundamental problems in computer vision. We focus on matching images of pedestrians from single image frames with different pose and viewpoint. Our approach focuses on finding methods of comparing pedestrian images that are invariant to elements not associated with the person's identity. Gray, Brennan, Tao |

| |

Efficient image representation using Haar-like features

The efficient and compact representation of images is a fundamental problem in computer vision. In this project, we propose methods that use Haar-like binary box functions to represent a single image or a set of images. A desirable property of these box functions is that their inner product operation with an image can be computed very efficiently. We show that using this efficient representation, many vision applications can be significantly accelerated, for example: template matching, image filtering, PCA projection, image reconstruction. Tang, Crabb, Tao |
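The efficiency of the inner product with a box function is usually obtained with an integral image (summed-area table), which the sketch below assumes; the blurb itself does not name the technique, and the function names are hypothetical. After one pass over the image, the inner product with any binary box costs four table lookups regardless of the box size.

```python
def integral_image(img):
    """Summed-area table with an extra leading row and column of zeros.

    S[y][x] holds the sum of img over rows 0..y-1 and columns 0..x-1.
    """
    h, w = len(img), len(img[0])
    S = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            S[y + 1][x + 1] = S[y][x + 1] + row_sum
    return S

def box_inner_product(S, top, left, bottom, right):
    """Inner product of the image with a binary box function.

    The box is 1 on rows top..bottom-1 and columns left..right-1 and 0
    elsewhere, so the inner product is the pixel sum inside the box.
    """
    return S[bottom][right] - S[top][right] - S[bottom][left] + S[top][left]

img = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
S = integral_image(img)
print(box_inner_product(S, 0, 0, 2, 2))  # 1 + 2 + 4 + 5 = 12
print(box_inner_product(S, 1, 1, 3, 3))  # 5 + 6 + 8 + 9 = 28
```

A Haar-like feature built from several boxes is then a signed combination of a handful of such sums.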

| |

Co-tracking using semi-supervised support vector machines

We treat tracking as a foreground/background classification problem and propose an online semi-supervised learning framework. Classification of new data and updating of the classifier are achieved simultaneously in a co-training framework. Experiments show that this framework performs better than state-of-the-art tracking algorithms on challenging sequences. Tang, Brennan, Tao |