Congratulations to Yunqian who was selected as one of the Best Presentation Awards in IPIN 2024 for his paper

Towards Semantic Spatial Awareness: Robust Text Spotting for Assistive Technology Applications

Sponsor |

Status |

Institutions |

| Center for Information Technology in the Interest of Society (CITRIS) | Completed | UC Santa Cruz (UCSC); UC Merced (UCM) |

Investigators |

Graduate students |

Undergraduate students |

| R. Manduchi, UCSC; S. Carpin, UCM | M. Cutter, UCSC; G. Erinc, UCM | K. Locatelli, UCM |

Introduction

The ability to move independently is a critical component of a person’s quality of life. For many people, however, independent mobility is hampered by one or more forms of disability: motor, cognitive, or sensorial. For example, blind persons experience difficulty moving and finding their way in a previously unexplored environment, due to lack of visual access to features that sighted persons use for self-orientation. Likewise, some wheelchair users may find it challenging or impossible to safely and reliably control their wheelchair due to poor upper limbs control or to cognitive impairment.

The long-term goal of this project is to develop computer vision technology to provide assistance to both categories of users mentioned above: persons who, due to visual impairment, experience difficulty self-orienting, and persons with mobility impairment who are unable to independently control the trajectory of their motorized wheelchair. Specifically, we will develop technology that promotes semantic spatial awareness in man-made environments by means of direct access to printed textual information.

The ability to assign semantic labels to places brings forth new intriguing opportunities for autonomous robotic navigation. Rather than dealing with a simple binary space (occupied/free), commands could be given in terms of high-level terms, and environment-specific navigation modules could be selected at run time. By accessing already existing semantic labels (which are normally placed for use by sighted humans), there is no need to instrument the environment, and thus costly infrastructure updates are avoided. This approach, which only recently started receiving attention by the robotic community, has profound implications for the way robots can interact with the human environment.

Text access by optical character recognition (OCR) is a mature technology. However, OCR was designed for use with “clean”, well-resolved images of text – typically from printed paper that was optically scanned. Recent years have witnessed a growing interest by the computer vision community in algorithms for “text spotting”, that is, text detection before it can be read by OCR, even when the imaging conditions are far from ideal (low resolution, poor exposure, motion blur). Text spotting can be performed very fast (at frame rate), an important characteristic when processing the video stream from a moving camera. The ability to identify from afar a region in image space that may contain readable text can trigger proactive exploration strategies aiming to extract richer semantic information from the environment. In the case of a blind person using their smartphone to search for signs (e.g., a certain office in a building), text spotting can provide real-time feedback (in acoustic or tactile form). The user is thus prompted to move closer to the area that has been detected as containing text, in order to get a better resolved image which can then be decoded and read aloud via OCR and text-to-speech.

Text spotting

We use a variation of the well known stroke width transform algorithm to detect individual text characters. The original stroke width transform paper primarily used the stroke width variance to classify potential letters. In our implementation, candidate letters are validated through a letter classifier, implemented as a random forest on specific image features. The first four features are inspired by the work of Neuman and Matas: the number of horizontal crossings, the number of holes within the region, the pixel area perimeter ratio, and the extent of region. In addition, we also consider the stroke width of a letter divided by its height, and the variance of the stroke width values.

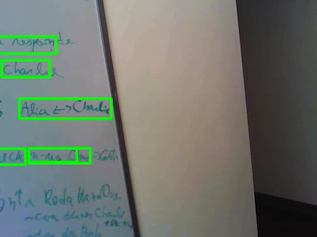

Chains of letters that occur spatially next to each other are hypothesized as words and are then validated based on a number of heuristics. We enhanced the second stage word validation step of the text localization algorithm by combining three word-level features. The first feature, centroid angle, originally proposed by Neumann and Matas, is a powerful cue for word validation since words are normally oriented consistently. Another word-level feature is from Tsai et al., and measures the number of pixels belonging to letters divided by the area of the convex hull of the region bound by the hypothesis word. This feature represents the extent of the word and greatly reduces the number of false positives. Another common source of spurious false positives in word detection are objects which have consistent stroke width, and may thus be misclassified as sequences of letters. These false words are normally characterized by repeated patterns such as blinds, crosswalks, and decorations. In order to mitigate the number of false positives caused by repeating noise, inspired by Tsai et al., again we check the autocorrelation of each letter compared to its adjacent neighbors. If too many of the letters are similar, we reject the word. Once a text segment has been detected, it is read by an open sourve OCR (Tesseract)

|

|

Navigation

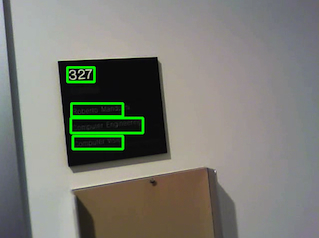

UCSC's text spotting code has been implemented and tested on a mobile robot at UCM, with the objective of demonstrating text discovery in an indoor environment. The robot is a Pioneer P3AT equipped with a SICL LMS-200 range finder and a stereocamera (Bumblebee 2). The control software is written in C++ and is based on Player and OpenCV.

The robot is equipped with the occupancy map of the environment, the text to be searched, and a set of approximate prior areas for the location of the text (defined as rectangular areas in global coordinates). The robot's initial position is unknown; it starts by attempting to localize itself based on laser range data using the AMCL algorithm (particle filter) provided in Player. Once it localizes itself, the robot begins moving towards the closest area in the set of priors. When it reaches the area, it begins to randomly explore the environment.

While the robot is running, an image processing pipeline is executed, which turns the stereo camera data into goal candidates. Images are initially processed by the text spotter, which produces bounding boxes for potential text regions. These regions are merged together based on depth information and turned into candidate goals, characterized by the orientation and position of the potential region of text. Existing candidates are updated by subsequent text spotter iterations; they are rejected if they do not re-appear (which typically indicates that the candidate did not represent a valid region of text). Candidates are used by the goal seeking process only after they have been seen in a certain number of consecutive image frames.

Once the robot detects a candidate inside the prior area towards which it is moving, it stops random exploration and begins navigating towards the candidate. At this point the main thread begins running OCR on the captured images. OCR uses only the regions provided by the text spotter for computational efficiency, and merges results from multiple images to minimize error and determine whether the candidate matches the current label. The robot navigates to a position in front of the label at a distance of approximately 2 meters, and decides whether the text is a match based on the results of OCR. If the text is is equal to the known target text, the robot assumes the label has been found. Otherwise, the robot labels the prior area as visited and restarts the proces moving towards the closest unvisited prior area. This video shows an example of navigation with text discovery. This video shows an example of navigation with text discovery.